From Prototype to Production-Ready AI Agents with Amazon Bedrock AgentCore

Agents are among the most dynamic frontiers in AI today. They combine planning, iterative loops, and self-reflection with external tools, APIs, and function calls, moving beyond the static outputs of traditional zero-shot prompting toward more general-purpose problem solving. Yet Gartner predicts that more than 40% of agentic AI projects will be cancelled by 2027 due to cost overruns, unclear business value, or insufficient risk management. At the same time, Gartner forecasts that by 2028 nearly 15% of day-to-day work decisions will be made autonomously by agentic AI and that one-third of enterprise software will embed such capabilities. This tension between high failure rates and rapid adoption underscores the need for enterprise-ready agentic AI. Amazon Bedrock AgentCore addresses this need by providing the foundation to build production-ready agents that can scale securely across the enterprise.

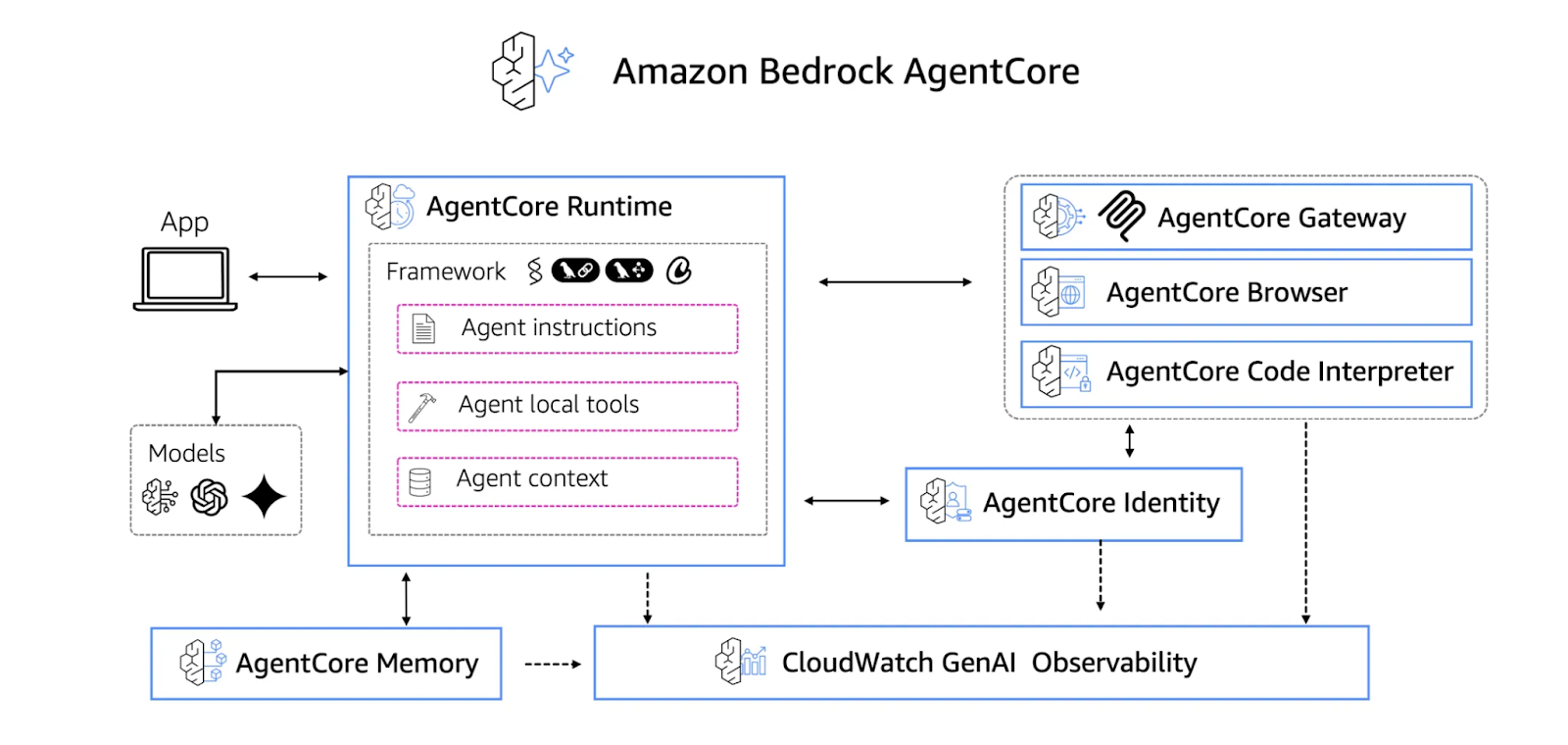

What is Amazon Bedrock AgentCore?

Amazon Bedrock AgentCore is an agentic platform to build, deploy, and operate highly capable agents securely at scale [1]. As part of Amazon Bedrock, AWS’s fully managed service for building and scaling generative AI applications without managing infrastructure, AgentCore extends these capabilities to agentic systems.

While experimentation and proofs of concept are valuable, agents deliver real business impact only once they are operational in production. This transition introduces significant hurdles, including security, scalability, interoperability across heterogeneous agents, handling large payloads, and supporting long-running processes. AgentCore addresses these challenges with an enterprise-grade environment that unifies runtime execution, communication, state management, identity, and observability. It is framework- and model-agnostic, so organizations can use their preferred frameworks, such as CrewAI, Strands, or LangGraph, and foundation models ranging from open-source models to those from providers like OpenAI, Google Gemini, or Anthropic (even models not available in Amazon Bedrock’s base model API).

How Amazon Bedrock AgentCore Services Work Together

Amazon Bedrock AgentCore is not a single component but a set of integrated services. From a developer’s perspective, working with AgentCore feels less like configuring isolated features and more like composing building blocks that follow the natural lifecycle of an agent. The primary services are Runtime, Identity, Memory, Gateway, Code Interpreter, Browser, and Observability.

Runtime

The Runtime service is the execution backbone. It provides a secure, serverless, and isolated environment where agents run with enterprise-grade guarantees. With minor code modifications, an agent can be deployed into this managed environment within minutes.

AgentCore Runtime is designed to run with any Large Language Model (LLM), including models available through Amazon Bedrock as well as external providers such as OpenAI and Google Gemini. Agents interact with other agents and tools using the Model Context Protocol (MCP), enabling standardized communication across ecosystems.

Runtime supports both real-time interactions and long-running workloads of up to eight hours, with the ability to process payloads of up to 100 MB across multiple modalities, including text, images, audio, and video. Each session is executed inside a dedicated microVM with isolated CPU, memory, and filesystem resources. Once the session ends, the microVM is terminated and fully sanitized, providing deterministic security guarantees even when operating over inherently non-deterministic AI processes.
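The limits quoted above lend themselves to a quick client-side sanity check before a request is sent. The following is a minimal sketch in plain Python, not part of the AgentCore SDK: the `preflight` function, the `PreflightResult` type, and the reading of "100 MB" as 100 × 1024 × 1024 bytes are all assumptions made for illustration.

```python
import json
from dataclasses import dataclass, field

# Limits quoted in the text. Interpreting "100 MB" as 100 * 1024 * 1024 bytes
# is an assumption; the text does not state the exact byte count.
MAX_PAYLOAD_BYTES = 100 * 1024 * 1024
MAX_SESSION_SECONDS = 8 * 60 * 60


@dataclass
class PreflightResult:
    ok: bool
    reasons: list = field(default_factory=list)


def preflight(payload: dict, expected_seconds: float) -> PreflightResult:
    """Hypothetical check run by a caller before invoking an agent."""
    reasons = []
    # Measure the payload the way it would travel: as UTF-8 encoded JSON.
    size = len(json.dumps(payload).encode("utf-8"))
    if size > MAX_PAYLOAD_BYTES:
        reasons.append(f"payload is {size} bytes, limit is {MAX_PAYLOAD_BYTES}")
    if expected_seconds > MAX_SESSION_SECONDS:
        reasons.append(
            f"expected runtime {expected_seconds}s exceeds {MAX_SESSION_SECONDS}s"
        )
    return PreflightResult(ok=not reasons, reasons=reasons)
```

A call such as `preflight({"prompt": "hi"}, expected_seconds=60)` returns a result with `ok` set to `True`, while an estimated runtime of nine hours is rejected with one reason attached.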

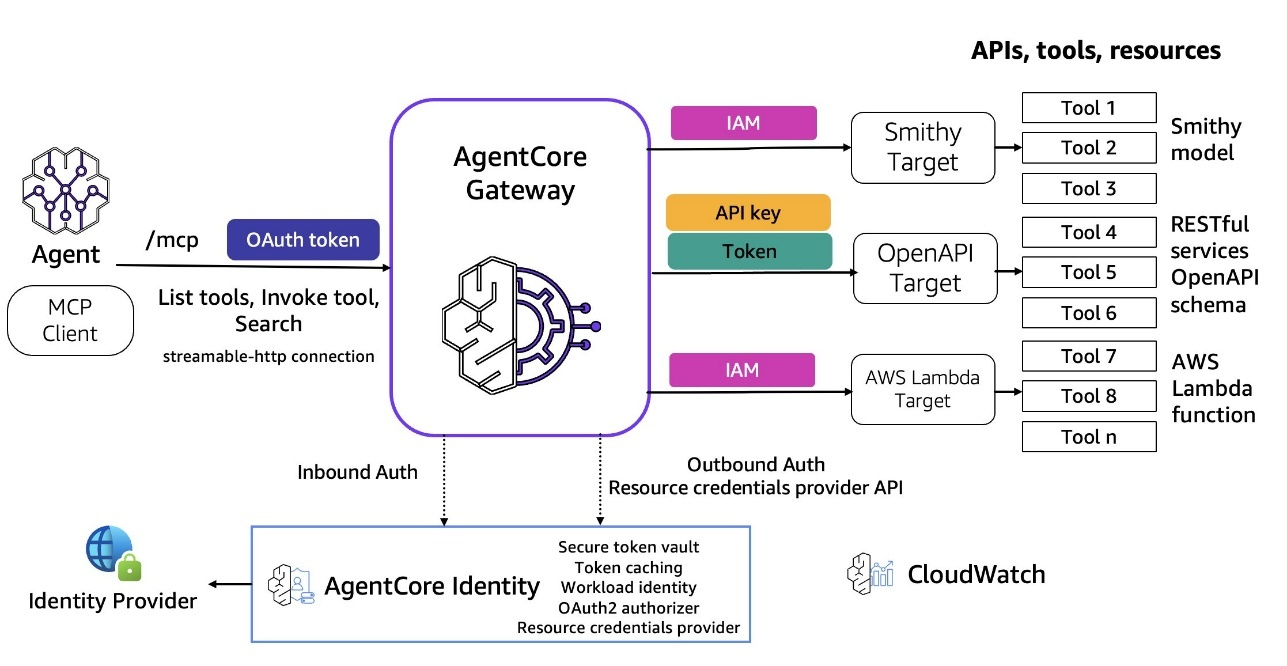

Identity

Amazon Bedrock AgentCore Identity is an identity and credential management service that allows agents and tools to access third-party services and AWS resources on behalf of users [2]. Credentials such as OAuth tokens, API keys, and client secrets are managed in a secure vault, so they never end up hardcoded or passed around in code. Identity also comes with built-in support for OAuth 2.0 and popular providers such as GitHub, Slack, Google, Zoom, Notion, and HubSpot.

Each agent has its own workload identity, represented by a unique ARN. Identities can be arranged in a hierarchy so administrators can group agents by directory or attribute and apply policies at different levels. This makes it easier to manage large numbers of agents while still keeping security controls precise and fine-grained. For developers, Identity integrates directly with the AgentCore SDK. Simple decorators such as @requires_access_token or @requires_api_key handle credential retrieval, refresh, and injection automatically.
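To make the credential-injection idea concrete, here is a small sketch of the general decorator pattern in plain Python. It is not the AgentCore SDK: the `inject_api_key` decorator, the in-memory `_VAULT` dictionary, and the `list_repos` tool are hypothetical stand-ins, and a real vault would also handle refresh and access policies.

```python
import functools

# Hypothetical in-memory stand-in for a managed credential vault.
_VAULT = {"github": "example-token-123"}


def inject_api_key(provider: str):
    """Decorator that looks up a credential and passes it in as `api_key`."""

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            try:
                key = _VAULT[provider]
            except KeyError:
                raise PermissionError(
                    f"no credential stored for {provider!r}"
                ) from None
            # The caller never sees or supplies the key; it is added here.
            return func(*args, api_key=key, **kwargs)

        return wrapper

    return decorator


@inject_api_key("github")
def list_repos(user: str, *, api_key: str) -> str:
    # A real tool would call the provider's API; this only shows that the
    # key arrives without being hardcoded at the call site.
    return f"listing repos for {user} with key ending in {api_key[-3:]}"
```

Calling `list_repos("alice")` works without the caller passing any secret, which is the property the vault-plus-decorator design is meant to provide.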

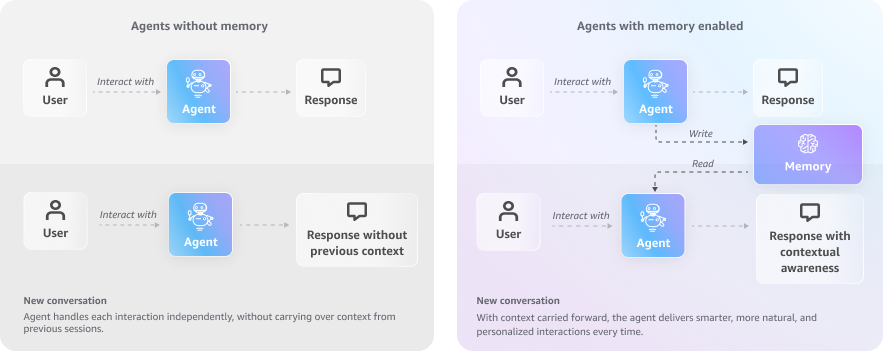

Memory

Amazon Bedrock AgentCore Memory gives agents the ability to retain and reuse context across sessions, conversations, and workflows. Instead of starting from scratch every time, agents can build on past interactions with both short-term memory (session context) and long-term memory (persistent knowledge across multiple sessions). Memory is fully isolated per session and per user, ensuring that sensitive information remains scoped correctly while still allowing agents to recall what’s relevant. It supports multiple modalities like text, images, audio, and more, so agents can reference richer context than just prompts. Memory also integrates natively with AgentCore Runtime and Gateway, making it easy to persist, retrieve, and update context without writing complex storage logic. There are also direct integrations with frameworks like LangChain and LangGraph.

A memory strategy defines how an agent stores and recalls past information. For instance, an agent might extract user preferences and maintain a profile that persists across sessions. Choosing the right strategy helps balance accuracy, performance, and cost depending on the use case. In AgentCore, memory can be configured in three ways: fully managed with built-in defaults, built-in with custom overrides, or entirely self-managed.
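The split between short-term and long-term memory, and the idea of a strategy that extracts user preferences, can be illustrated with a toy in-memory store. This is a sketch under stated assumptions, not the AgentCore Memory API: the `MemoryStore` class, its method names, and the naive "I prefer ..." extraction rule are all invented for illustration.

```python
from collections import defaultdict


class MemoryStore:
    """Toy store: per-session short-term events, per-user long-term facts."""

    def __init__(self):
        self._short = defaultdict(list)  # (user_id, session_id) -> messages
        self._long = defaultdict(dict)   # user_id -> extracted profile

    def add_event(self, user_id: str, session_id: str, text: str) -> None:
        # Short-term memory: keep the raw message, scoped to user and session.
        self._short[(user_id, session_id)].append(text)
        # Hypothetical preference strategy: a deliberately naive rule that
        # treats sentences starting with "I prefer" as a lasting preference.
        prefix = "i prefer "
        if text.lower().startswith(prefix):
            value = text[len(prefix):].strip().rstrip(".")
            self._long[user_id]["preference"] = value

    def session_context(self, user_id: str, session_id: str) -> list:
        return list(self._short.get((user_id, session_id), []))

    def profile(self, user_id: str) -> dict:
        return dict(self._long.get(user_id, {}))


store = MemoryStore()
store.add_event("u1", "s1", "I prefer aisle seats.")
store.add_event("u1", "s1", "Book a flight to Sofia")
# A new session starts with no short-term context ...
new_session = store.session_context("u1", "s2")
# ... but the extracted preference is still available for the same user.
remembered = store.profile("u1")
```

Here `new_session` is empty while `remembered` still holds the aisle-seat preference, which mirrors the session-scoped versus persistent distinction described above.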

Gateway

The Gateway service connects agents to the outside world by turning APIs, AWS Lambda functions, and enterprise systems into agent-compatible tools. Instead of hardcoding integrations into the agent itself, Gateway acts as a broker: tools can be registered once and reused across multiple agents. This separation makes it easier to evolve integrations without redeploying or rewriting agents, reducing operational friction. Administrators can enforce authentication and fine-grained access control on every tool invocation, ensuring that agents interact with external services securely. At scale, Gateway simplifies interoperability across heterogeneous environments by standardizing how agents discover, call, and manage tools.
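The broker idea (register a tool once, reuse it across agents, and check access on every call) can be sketched in a few lines of Python. Everything here is hypothetical: `ToolGateway`, its methods, and the agent and tool names are illustrative and do not reflect the actual Gateway API.

```python
class ToolGateway:
    """Toy broker: tools are registered once and shared across agents."""

    def __init__(self):
        self._tools = {}  # tool name -> (callable, set of allowed agent ids)

    def register(self, name, func, allowed_agents):
        self._tools[name] = (func, set(allowed_agents))

    def list_tools(self, agent_id):
        # Discovery: an agent only sees the tools it is allowed to call.
        return sorted(
            name
            for name, (_, allowed) in self._tools.items()
            if agent_id in allowed
        )

    def invoke(self, agent_id, name, **kwargs):
        if name not in self._tools:
            raise KeyError(f"unknown tool {name!r}")
        func, allowed = self._tools[name]
        # Access control is enforced on every invocation, not at registration.
        if agent_id not in allowed:
            raise PermissionError(f"{agent_id!r} may not call {name!r}")
        return func(**kwargs)


gateway = ToolGateway()
gateway.register(
    "convert_currency",
    lambda amount, rate: round(amount * rate, 2),
    allowed_agents={"billing-agent", "support-agent"},
)
result = gateway.invoke("billing-agent", "convert_currency", amount=10, rate=1.5)
```

Because the integration lives in the gateway rather than in each agent, swapping the implementation behind `convert_currency` would not require touching either agent.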

Code Interpreter

With those basics in place, capabilities can be layered on. The Code Interpreter extends an agent’s reasoning abilities by running Python or JavaScript securely inside a sandboxed environment. This enables agents to perform calculations, transform data, and generate outputs that go beyond the capabilities of an LLM alone. The execution is isolated at the microVM level, so code runs without exposing enterprise systems to risk. Developers benefit from a controlled environment where the agent can dynamically write and execute snippets in response to user input or workflow needs. By combining LLM reasoning with programmatic execution, the Code Interpreter bridges the gap between natural language understanding and precise computational logic.
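As a rough local analogy, generated code can be run in a separate interpreter process with a timeout. This sketch uses only the Python standard library and offers far weaker isolation than the microVM-level sandbox described above; the `run_snippet` helper is hypothetical and is not how the Code Interpreter service is invoked.

```python
import subprocess
import sys


def run_snippet(code: str, timeout_s: float = 5.0) -> str:
    """Run a Python snippet in a separate process and return its stdout.

    A process boundary is NOT a sandbox: the child can still reach the
    filesystem and network. This only illustrates the execute-and-capture loop.
    """
    result = subprocess.run(
        # -I starts the interpreter in isolated mode (ignores user site
        # packages and PYTHON* environment variables).
        [sys.executable, "-I", "-c", code],
        capture_output=True,
        text=True,
        timeout=timeout_s,  # raises subprocess.TimeoutExpired if exceeded
    )
    if result.returncode != 0:
        raise RuntimeError(result.stderr.strip())
    return result.stdout.strip()


# An agent asked for an exact sum can delegate the arithmetic to code.
answer = run_snippet("print(sum(range(10)))")
```

The point of the pattern is that the model writes the snippet and a deterministic interpreter produces the number, rather than the model estimating it.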

Browser

The Browser service gives agents the ability to interact directly with web-based applications and content. Running in a secure, managed environment, Browser sessions allow agents to navigate pages, extract information, and trigger interactions at enterprise scale. This is particularly valuable for use cases where no structured API is available or where dynamic web data is required. Security boundaries are strictly enforced so browsing activity is isolated per session and per agent identity.

Observability

Observability underpins the entire AgentCore platform by making agent activity transparent and measurable. Every interaction, from tool calls to memory lookups and reasoning steps, can be traced, logged, and monitored in real time. This provides developers and operators with insights into agent decision-making, system performance, and potential errors. Built-in integration with AWS monitoring services enables correlation across agents and services, supporting compliance and audit requirements.
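What "tracing every interaction" means in practice can be shown with a minimal span recorder. This is a sketch in plain Python, not AgentCore Observability or any AWS monitoring API: the `Tracer` class and the span names are assumptions chosen for illustration.

```python
import time
from contextlib import contextmanager


class Tracer:
    """Toy tracer that records nested spans with timing and status."""

    def __init__(self):
        self.spans = []   # finished spans, in completion order
        self._stack = []  # names of currently open spans

    @contextmanager
    def span(self, name, **attributes):
        record = {
            "name": name,
            "parent": self._stack[-1] if self._stack else None,
            "attributes": attributes,
            "status": "ok",
        }
        self._stack.append(name)
        start = time.perf_counter()
        try:
            yield record
        except Exception as exc:
            # Record the failure, then let the error propagate unchanged.
            record["status"] = f"error: {exc}"
            raise
        finally:
            record["duration_s"] = time.perf_counter() - start
            self._stack.pop()
            self.spans.append(record)


tracer = Tracer()
with tracer.span("agent_turn", user="u1"):
    with tracer.span("tool_call", tool="search"):
        pass  # a real agent would call the tool here
```

After this runs, `tracer.spans` holds the `tool_call` span first (it finished first) with `agent_turn` as its parent, which is the kind of parent-child structure that lets an operator follow one request across tool calls and memory lookups.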

Why Agentic AI Matters

Gartner projects that by 2029 agentic AI will autonomously resolve up to 80% of routine customer-service requests, potentially reducing operational costs by around 30% [4]. But agentic AI is already shaping industries well beyond customer service. In finance, autonomous agents assist with fraud detection, market data analysis, and compliance monitoring. In healthcare, they support clinicians by automating administrative workflows and providing contextual insights from patient records. In software development, agents increasingly act as copilots, automating planning, implementation, and testing.

Surveys show that developers are enthusiastic about this shift: Salesforce’s State of IT report notes that over 90% of developers are optimistic about AI’s impact on their careers, and 96% expect it to improve their daily work [5]. This optimism underscores how quickly agentic AI is becoming part of the enterprise technology stack.

AgentCore helps organizations accelerate this adoption, focusing on time-to-value rather than just time-to-prototype.

What’s Next

Ready to turn agentic AI from slideware into real outcomes? Several Clouds can blueprint your Amazon Bedrock AgentCore architecture, set up identity and guardrails, and launch a measured pilot with clear KPIs, cost controls, and observability, so your first agent ships to production safely and fast.

Let’s talk about your use case today or learn more about our Generative AI services.

References

1. Amazon Web Services. What is Amazon Bedrock AgentCore? AWS Documentation. https://docs.aws.amazon.com/bedrock-agentcore/latest/devguide/what-is-bedrock-agentcore.html

2. Amazon Web Services. Overview of Amazon Bedrock AgentCore Identity. AWS Documentation. https://docs.aws.amazon.com/bedrock-agentcore/latest/devguide/identity-overview.html

3. Amazon Web Services. Add memory to your Amazon Bedrock AgentCore agent. AWS Documentation. https://docs.aws.amazon.com/bedrock-agentcore/latest/devguide/memory.html

4. Gartner, Inc. Gartner Predicts Agentic AI Will Autonomously Resolve 80% of Common Customer Service Issues Without Human Intervention by 2029. Press Release, March 5, 2025. https://www.gartner.com/en/newsroom/press-releases/2025-03-05-gartner-predicts-agentic-ai-will-autonomously-resolve-80-percent-of-common-customer-service-issues-without-human-intervention-by-20290

5. Salesforce. Agentic AI & the Future of Developers: State of IT Survey. https://www.salesforce.com/news/stories/agentic-ai-developer-future-sentiment/

6. Patel, D., Rustagi, K., and Liu, M. Introducing Amazon Bedrock AgentCore Gateway: Transforming enterprise AI agent tool development. AWS Machine Learning Blog, August 2025. https://aws.amazon.com/blogs/machine-learning/introducing-amazon-bedrock-agentcore-gateway-transforming-enterprise-ai-agent-tool-development/
