Choosing the Right Feature Store: Feast vs. Amazon SageMaker Feature Store

Introduction

As machine learning systems mature, they face a recurring set of challenges: multiple models that share the same feature sets, the need to support both real-time and batch inference, and the complexity of large-scale feature engineering. Feature stores address these challenges by providing a centralized system for storing, managing and serving ML features, both offline for training and online for inference. They have become an essential part of ML infrastructure for ensuring consistency, reusability and scalability across workflows.

By maintaining a single source of truth for features, feature stores eliminate duplicated work and prevent the inconsistencies that arise when different teams recompute the same features independently. This is critical for avoiding poor reproducibility and training-serving skew, where a model performs well during development but degrades in production because the features it receives at inference time are computed differently from those it was trained on.
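To make the single-source-of-truth idea concrete, here is a minimal, library-free Python sketch. The feature `days_since_last_order` and all helper functions are hypothetical; the point is only that one feature definition is reused by both the offline (training) path and the online (serving) path, which is the property a feature store enforces at scale.

```python
from datetime import datetime
from typing import Dict, List


def days_since_last_order(last_order_at: datetime, as_of: datetime) -> int:
    """Hypothetical feature: whole days between the last order and a reference time."""
    return (as_of - last_order_at).days


def build_training_rows(history: List[Dict]) -> List[Dict]:
    """Offline path: compute the feature for each labeled example at its own event time."""
    return [
        {
            "customer_id": row["customer_id"],
            "days_since_last_order": days_since_last_order(row["last_order_at"], row["event_time"]),
            "label": row["label"],
        }
        for row in history
    ]


def build_serving_row(customer_id: str, last_order_at: datetime, now: datetime) -> Dict:
    """Online path: compute the same feature for a live prediction request."""
    return {
        "customer_id": customer_id,
        "days_since_last_order": days_since_last_order(last_order_at, now),
    }
```

Because both paths call the same function, a change to the feature logic reaches training and serving together. A feature store generalizes this by registering feature definitions centrally instead of relying on teams sharing the same code.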

Two popular options are Feast, an open-source feature store, and Amazon SageMaker Feature Store, a fully managed service integrated into the AWS ecosystem. In this post, we compare the two across architecture, operational trade-offs, and integration capabilities to help you determine the right fit for your ML platform.

Core components of a feature store

As a purpose-built system for managing and serving features to models, a feature store typically provides the following components:

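- Offline storage - stores historical feature values for model training and batch inference, optimized for large-scale analytical workloads. It also supports point-in-time-correct retrieval for backtesting and retraining, so that each training example is joined only with feature values that were available at that example's timestamp, which prevents leakage of future data.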

- Online storage - serves the latest precomputed feature values to production models with low latency, so that features do not have to be computed on the fly at inference time.

- Feature registry - provides a centralized catalog for feature definitions, schema versions, lineage and metadata. Serves as a single source of truth for feature schemas.

- Feature serving API - unified interface to fetch features for both training (offline) and inference (online). Often includes SDKs for integration with model training frameworks (PyTorch, TensorFlow, etc.).
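The difference between offline and online retrieval can be sketched in plain Python. This is a conceptual illustration under simplifying assumptions (an in-memory dictionary stands in for both stores, and all names are hypothetical), not how Feast or SageMaker implement it: the offline path performs a point-in-time lookup for each labeled event, while the online path keeps only the latest value per entity.

```python
from bisect import bisect_right
from datetime import datetime
from typing import Dict, List, Optional, Tuple

# Offline store stand-in: entity_id -> list of (event_time, value), sorted by event_time.
FeatureHistory = Dict[str, List[Tuple[datetime, float]]]


def point_in_time_lookup(history: FeatureHistory, entity_id: str, as_of: datetime) -> Optional[float]:
    """Return the latest value with event_time <= as_of, or None if none existed yet."""
    rows = history.get(entity_id, [])
    times = [t for t, _ in rows]
    idx = bisect_right(times, as_of)  # number of rows recorded at or before as_of
    return rows[idx - 1][1] if idx > 0 else None


def build_training_set(history: FeatureHistory, labels: List[Tuple[str, datetime, int]]) -> List[Dict]:
    """Join each labeled event to the feature value that was known at that moment."""
    return [
        {"entity_id": e, "event_time": ts, "feature": point_in_time_lookup(history, e, ts), "label": y}
        for e, ts, y in labels
    ]


def latest_values(history: FeatureHistory) -> Dict[str, float]:
    """Online store stand-in: keep only the most recent value per entity."""
    return {e: rows[-1][1] for e, rows in history.items() if rows}


if __name__ == "__main__":
    history = {"u1": [(datetime(2024, 1, 1), 0.2), (datetime(2024, 1, 5), 0.7)]}
    labels = [("u1", datetime(2024, 1, 3), 0), ("u1", datetime(2024, 1, 6), 1)]
    print([r["feature"] for r in build_training_set(history, labels)])  # [0.2, 0.7]
    print(latest_values(history))  # {'u1': 0.7}
```

For the label observed on 3 January, the lookup returns the value recorded on 1 January rather than the later 5 January value, which is exactly the leakage that point-in-time correctness prevents.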

Amazon SageMaker Feature Store

Amazon SageMaker Feature Store is a fully managed, serverless feature store designed to help teams ingest, store, retrieve and share features efficiently across ML models. Tightly integrated with Amazon SageMaker, AWS Identity and Access Management (IAM), Amazon S3 and other AWS services, it simplifies feature management while providing scalability and security.

One of its key advantages over Feast is that both the online and offline stores are built in and fully managed, enabling organizations to serve low-latency features for real-time inference while retaining historical data for training and batch processing. It also integrates with AWS's suite of ML tools, including SageMaker Pipelines, SageMaker Studio, AWS Glue and Amazon Athena, allowing teams to streamline their workflows within a single ecosystem. The platform additionally provides feature transformation capabilities [1], along with managed security, monitoring and lineage tracking that support compliance and governance requirements.
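As a hedged illustration of the managed online store, the sketch below uses the boto3 `sagemaker-featurestore-runtime` client, whose `put_record` and `get_record` operations exchange records as lists of `{"FeatureName": ..., "ValueAsString": ...}` entries. It assumes a feature group already exists (any feature group name and feature names you pass in are your own; the ones in the usage note are hypothetical) and that boto3 and AWS credentials are available. Only the two conversion helpers run without AWS access.

```python
from typing import Any, Dict, List


def to_sagemaker_record(features: Dict[str, Any]) -> List[Dict[str, str]]:
    """Convert a flat dict into the Record list expected by put_record."""
    return [{"FeatureName": name, "ValueAsString": str(value)} for name, value in features.items()]


def from_sagemaker_record(record: List[Dict[str, str]]) -> Dict[str, str]:
    """Convert the Record list returned by get_record back into a dict (values stay strings)."""
    return {item["FeatureName"]: item["ValueAsString"] for item in record}


def write_and_read(feature_group_name: str, features: Dict[str, Any], record_id_name: str) -> Dict[str, str]:
    """Write one record and read it back from the online store (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helpers above work without the AWS SDK installed

    runtime = boto3.client("sagemaker-featurestore-runtime")
    runtime.put_record(FeatureGroupName=feature_group_name, Record=to_sagemaker_record(features))
    response = runtime.get_record(
        FeatureGroupName=feature_group_name,
        RecordIdentifierValueAsString=str(features[record_id_name]),
    )
    # The "Record" key is absent when no record matches the identifier.
    return from_sagemaker_record(response.get("Record", []))
```

A call such as `write_and_read("patient-risk-features", {"patient_id": 42, "risk_score": 0.87, "event_time": "2023-06-21T00:00:00Z"}, "patient_id")` would write the record and read it back. Values come back as strings, so callers cast them as needed.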

Amazon SageMaker Feature Store is particularly well suited to organizations that operate entirely within AWS and prefer a fully managed, scalable and secure solution for feature storage. It is a strong choice for teams looking to simplify their MLOps processes by using SageMaker Pipelines and Studio for orchestration and development, and for businesses that require strict governance, auditing and deep integration with AWS services. For example, a healthcare analytics company might use Amazon SageMaker Feature Store to manage patient risk scores and lab result features for predictive care models. Because of HIPAA and other regulatory requirements, all of its data and infrastructure must remain within AWS-managed services to ensure proper encryption, auditability and access control via IAM.

Feast (Feature Store)

Feast (Feature Store) is an open-source, cloud-agnostic feature store designed to support real-time machine learning applications. Its modular architecture allows it to integrate with multiple backend systems for both the online and offline stores, offering flexibility across deployment environments. A key advantage of Feast lies in these pluggable backends, which include options such as BigQuery, Redshift and Snowflake for offline storage and Redis for online storage, allowing teams to tailor the infrastructure to their specific needs. Feast can run on Kubernetes, in cloud environments or on-premises, and it integrates easily with custom machine learning stacks, making it a good fit for organizations that prioritize adaptability. Compared with SageMaker Feature Store, however, Feast is still maturing and has limited support for some functionality, such as feature transformations and feature monitoring, for which it relies on third-party tools such as Evidently [2].
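Pluggable backends follow a familiar pattern: serving code depends on a storage contract, and concrete adapters implement it. The sketch below illustrates that pattern with a `typing.Protocol`. The `OnlineBackend` contract and `InMemoryOnlineBackend` class are hypothetical and deliberately simpler than Feast's actual plugin base classes, which have different, richer method signatures.

```python
from typing import Dict, List, Optional, Protocol


class OnlineBackend(Protocol):
    """Hypothetical storage contract; real adapters could wrap Redis, DynamoDB, etc."""

    def write(self, entity_key: str, values: Dict[str, float]) -> None:
        """Upsert feature values for one entity."""
        ...

    def read(self, entity_key: str, feature_names: List[str]) -> Dict[str, Optional[float]]:
        """Return the requested features, with None for anything missing."""
        ...


class InMemoryOnlineBackend:
    """Dictionary-backed implementation, useful for local development and tests."""

    def __init__(self) -> None:
        self._data: Dict[str, Dict[str, float]] = {}

    def write(self, entity_key: str, values: Dict[str, float]) -> None:
        self._data.setdefault(entity_key, {}).update(values)

    def read(self, entity_key: str, feature_names: List[str]) -> Dict[str, Optional[float]]:
        row = self._data.get(entity_key, {})
        return {name: row.get(name) for name in feature_names}


def serve_features(store: OnlineBackend, entity_key: str, feature_names: List[str]) -> Dict[str, Optional[float]]:
    """Serving code depends only on the contract, not on the concrete backend."""
    return store.read(entity_key, feature_names)
```

Swapping the in-memory class for a Redis- or DynamoDB-backed adapter with the same two methods would leave `serve_features` unchanged, which is the kind of flexibility described above.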

Feast is particularly well suited to teams operating in multi-cloud or hybrid environments where real-time feature ingestion and serving are critical. It appeals to organizations that prefer open-source, modular components in their MLOps ecosystems and aim to build platform-agnostic ML infrastructure. For example, a global e-commerce company operating across multiple clouds might choose Feast to power real-time personalization, taking advantage of its support for streaming data (e.g. current browsing behaviour streamed via Kafka and persisted into Amazon ElastiCache for Redis) and its pluggable backends (e.g. historical purchase patterns queried from BigQuery and Snowflake).

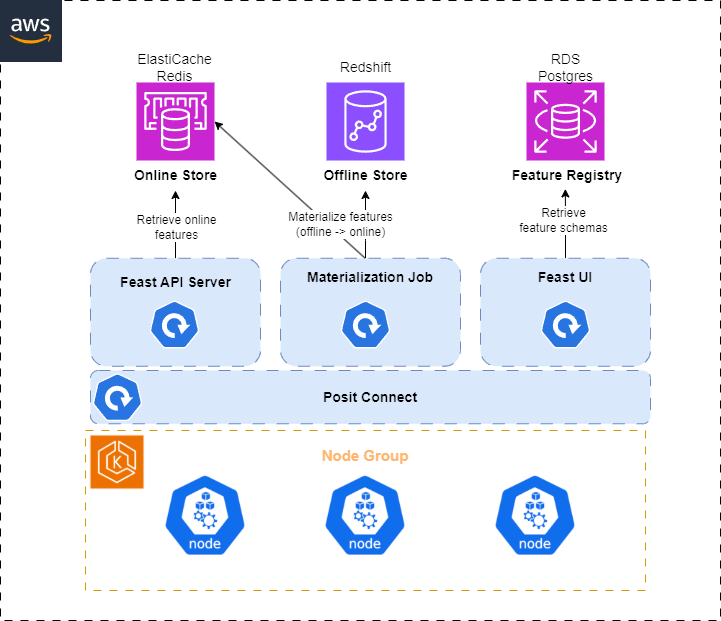
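The streaming half of the e-commerce example can be sketched as a consumer that keeps a rolling five-minute view count per user and writes each fresh value to the online store. Here a Python iterable stands in for the Kafka topic and a dictionary for the Redis-backed online store; the window length, feature name and function are assumptions for illustration, not Feast APIs.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta
from typing import Deque, Dict, Iterable, Tuple

WINDOW = timedelta(minutes=5)  # assumed window length for this example


def update_views_last_5m(events: Iterable[Tuple[str, datetime]], online: Dict[str, int]) -> Dict[str, int]:
    """Consume (user_id, event_time) events in time order and keep a rolling count per user.

    `events` stands in for a Kafka topic and `online` for a Redis-backed online store.
    """
    windows: Dict[str, Deque[datetime]] = defaultdict(deque)
    for user_id, ts in events:
        window = windows[user_id]
        window.append(ts)
        # Evict this user's events that are at least WINDOW older than the current one.
        while window and window[0] <= ts - WINDOW:
            window.popleft()
        online[user_id] = len(window)  # write the fresh value to the online store
    return online
```

This sketch only refreshes a user's count when that user produces a new event, so counts for inactive users go stale. A production pipeline would also expire old values, for example with a TTL on the online store keys.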

However, because Feast is not a managed service, teams are responsible for maintaining the underlying infrastructure. This includes configuring and securing the online and offline stores, orchestrating batch and streaming pipelines (e.g. using Airflow or Posit Connect), and implementing monitoring, observability and access control. While this gives teams maximum flexibility and control, it also introduces operational overhead and a steeper learning curve, especially for organizations without mature DevOps or platform engineering practices. The following diagram shows an example architecture for running Feast's core components on AWS:

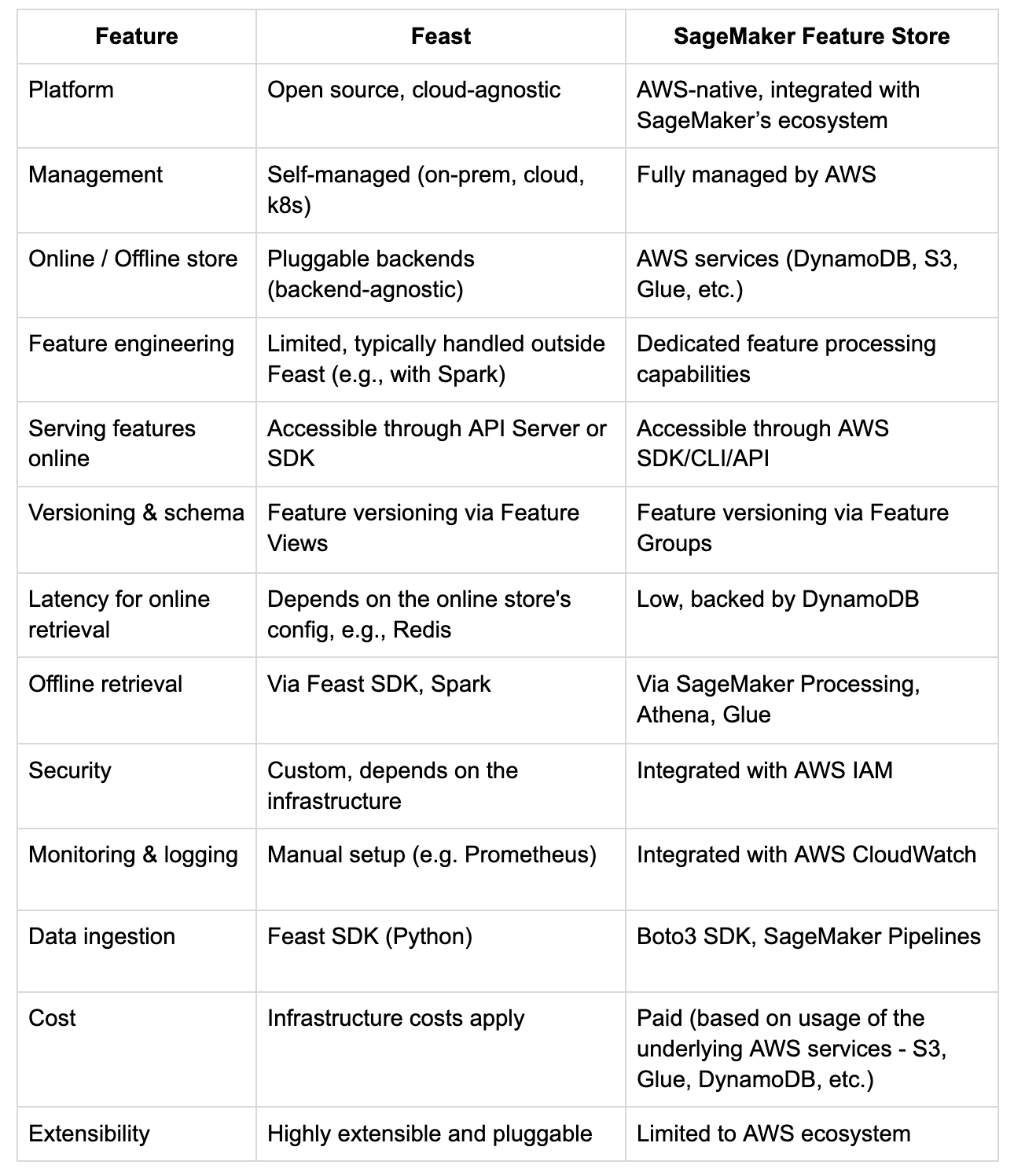
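Part of that self-managed orchestration is materialization: periodically copying the newest feature values from the offline store into the online store (Feast exposes this through commands such as `feast materialize-incremental`). The sketch below shows the core idea in plain Python under simplifying assumptions: lists and dictionaries stand in for both stores, and the `materialize` function is hypothetical rather than Feast's implementation. A scheduler such as Airflow would call it once per interval, passing the previous run's end time as the next start.

```python
from datetime import datetime
from typing import Dict, List, Set, Tuple

# Offline rows: (entity_id, event_time, value). Online table: entity_id -> (event_time, value).
OfflineRows = List[Tuple[str, datetime, float]]
OnlineTable = Dict[str, Tuple[datetime, float]]


def materialize(offline: OfflineRows, online: OnlineTable, start: datetime, end: datetime) -> int:
    """Upsert the newest value per entity with start < event_time <= end.

    Returns the number of distinct entities written in this run.
    """
    updated: Set[str] = set()
    # Process rows oldest-first so the last write per entity is the newest one.
    for entity_id, ts, value in sorted(offline, key=lambda row: row[1]):
        if not (start < ts <= end):
            continue  # outside this run's interval
        current = online.get(entity_id)
        # Never overwrite a fresher value that is already in the online store.
        if current is None or ts >= current[0]:
            online[entity_id] = (ts, value)
            updated.add(entity_id)
    return len(updated)
```

Using half-open intervals (exclusive start, inclusive end) means consecutive runs never process the same row twice, and the freshness check keeps a late-arriving older row from overwriting a newer online value.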

Table of comparison

The table below summarizes the main differences between both solutions, highlighting their key features, architectural differences, and suitability for various machine learning workflows.

Conclusion

Choosing the right feature store depends on the ML use case, team expertise and infrastructure strategy. For organizations already invested in the AWS ecosystem that need a secure, integrated and managed experience, Amazon SageMaker Feature Store is a robust choice.

On the other hand, if you're building a flexible, cloud-agnostic ML platform or require real-time serving across multiple environments, Feast offers the customization and modularity to support advanced MLOps pipelines. The trade-off is that your team must deploy, operate and support the underlying infrastructure itself.

Both solutions continue to evolve, and either can serve as a foundational component of a scalable machine learning architecture.

References

[1] AWS, “Transform data into ML features using SageMaker Feature Store feature processing”, June 21, 2023.

[2] Jakub Jurczak, “Feature Store comparison: 4 Feature Stores - explained and compared”, GetInData, June 6, 2022. https://getindata.com/blog/feature-store-comparison-4-feature-stores-explained-compared/
